High-dimensional Inference of Large Noisy Matrices: The Old, the New, and the Unknown

Abstract

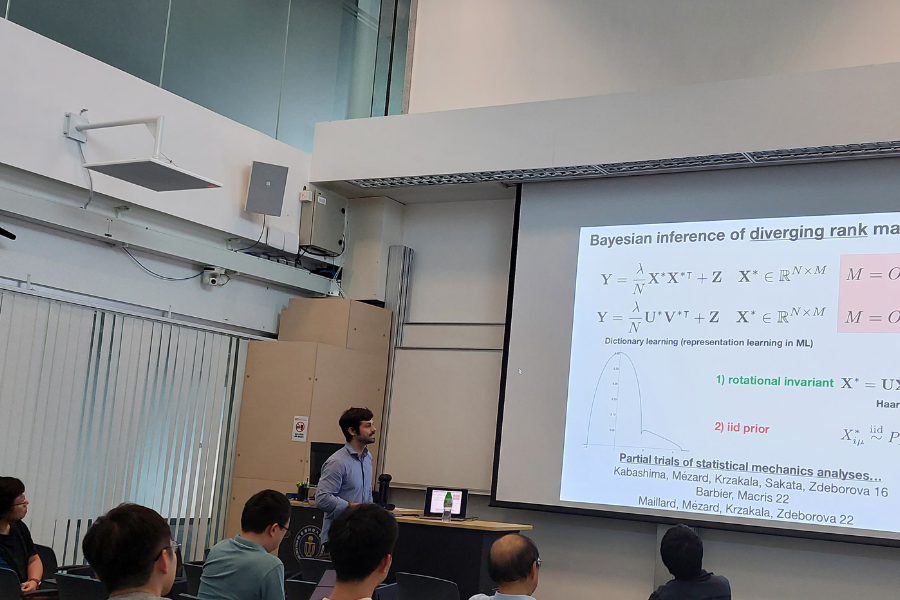

Data often comes in the form of tables. It is thus natural that the recovery of large matrices corrupted by noise is a central theme in high-dimensional inference, with applications in machine learning, signal processing, communications, biology, neuroscience, and finance. At a high level, theoretical analyses fall into two main regimes: the much better understood «low-rank regime», where the clean matrix to recover has a much smaller rank than the corrupted data matrix, and the challenging «high-rank regime», where the two have comparable ranks. Two complementary lines of research have emerged. One, rooted in random matrix theory, is concerned with spectral properties and spectral estimators (such as principal component analysis) for matrix recovery. The other comes from statistical physics and information theory and focuses on Bayesian estimators and their limits, including approximate message-passing algorithms. The speaker will review a very biased selection of older and more recent results along these two lines, discuss the main differences between the two complementary rank-growth regimes, and present some open questions that remain a challenge for theoreticians and practitioners alike.
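As a minimal illustration of the low-rank regime and of a spectral estimator, the Python sketch below uses the rank-one spiked Wigner model. This model is an assumption chosen for concreteness, not necessarily one discussed in the talk. A hidden vector x with entries ±1 is observed through Y = (snr/n) x xᵀ + W/√n, where W is a symmetric Gaussian (GOE) noise matrix. The estimator is plain PCA, meaning the top eigenvector of Y. For this normalization, random matrix theory predicts a squared overlap of max(0, 1 - 1/snr²) between that eigenvector and x/√n in the large-n limit. This is the BBP transition at snr = 1. The helper names `spiked_wigner` and `pca_overlap` are hypothetical.

```python
import numpy as np

def spiked_wigner(n, snr, rng):
    """Hypothetical helper: rank-one spike plus full-rank symmetric Gaussian noise.

    Assumed model: Y = (snr / n) x x^T + W / sqrt(n), with x uniform in {-1, +1}^n.
    """
    x = rng.choice([-1.0, 1.0], size=n)        # hidden signal, ||x||^2 = n
    a = rng.standard_normal((n, n))
    w = (a + a.T) / np.sqrt(2.0)               # GOE noise: off-diagonal variance 1
    y = (snr / n) * np.outer(x, x) + w / np.sqrt(n)
    return x, y

def pca_overlap(x, y):
    """Squared overlap between the top eigenvector of y and x / sqrt(n)."""
    _, eigvecs = np.linalg.eigh(y)             # eigenvalues returned in ascending order
    v = eigvecs[:, -1]                         # unit-norm eigenvector of the largest eigenvalue
    return float((v @ x) ** 2 / x.size)

rng = np.random.default_rng(0)
n = 1000
for snr in (0.5, 2.0):
    x, y = spiked_wigner(n, snr, rng)
    predicted = max(0.0, 1.0 - 1.0 / snr**2)   # large-n BBP prediction for this model
    print(f"snr={snr}: empirical={pca_overlap(x, y):.3f}, predicted={predicted:.3f}")
```

Below the threshold (snr = 0.5), the top eigenvector carries essentially no information about x. Above it (snr = 2), the overlap should land near 0.75 up to finite-size fluctuations. The sketch only covers a rank-one signal, so it says nothing about the high-rank regime mentioned above.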

About the Speaker

Prof. Jean BARBIER completed his PhD at the École Normale Supérieure in Paris, France, on the application of spin glass techniques to the analysis of high-dimensional inference and error-correcting codes. During his postdoc at EPFL in Lausanne, Switzerland, his research focused on the rigorous validation of spin glass approaches for inference and machine learning. He is now an Associate Professor at the Abdus Salam International Centre for Theoretical Physics in Trieste, Italy, and is a recipient of an ERC Starting Grant from the European Research Council for his project on matrix inference and neural networks.

The research interests of Prof. Barbier's group center on information processing systems arising in machine learning, signal processing, and computer science. Using a mix of rigorous and physics approaches, the group studies these systems and their associated algorithms with tools from the statistical physics of disordered systems, information theory, and random matrix theory. The goal is to quantify the optimal performance achievable when processing big data, as well as how close to optimality computationally efficient algorithms can operate.

For Attendees' Attention

Seating is on a first-come, first-served basis.